What Is ChatGPT Doing... and Why Does It Work? (Part 8)

The Concept of Embeddings

Neural nets (at least as they’re currently set up) are fundamentally based on numbers. So if we’re going to use them to work on something like text, we’ll need a way to represent our text with numbers. And certainly we could start (essentially as ChatGPT does) by just assigning a number to every word in the dictionary. But there’s an important idea, central for example to ChatGPT, that goes beyond that: the idea of “embeddings”. One can think of an embedding as a way to try to represent the “essence” of something by an array of numbers, with the property that “nearby things” are represented by nearby numbers.

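PS: At the lowest level a computer works with only two digits, 0 and 1. The simplest way to represent different characters with them is a mapping table that links numbers to characters. For example, let 1 stand for “你”, 2 for “好”, 3 for “世” and 4 for “界”; then the 1234 a computer sees is the “你好世界” (“hello world”) we see. For more on this, see my other article, 《如何只用0和1创造全世界?》 (“How to Create the Whole World with Only 0s and 1s?”). The idea of word embeddings goes further than using numbers to stand for words: the numbers also reflect relationships between words. For example, “你” and “好” are more closely related than “你” and “界”, because “你好” (“hello”) forms a meaningful phrase. So we could instead use 1 for “你”, 2 for “好” and 10000 for “界”, so that the distance between the numbers reflects the distance between the characters. (This single-number version is only a toy illustration; real embeddings use long lists of numbers, as the article explains next.)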
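To make the PS concrete, here is a minimal Python sketch contrasting the two ideas. The words, the IDs and the two-number vectors are all made up for illustration (real embeddings are learned and much longer). With arbitrary IDs, “cat” looks closer to “apple” than to “dog”; with the hand-picked embedding, it is the other way round.

```python
import math

# Hypothetical ID table: each word just gets the next free number.
word_to_id = {"cat": 1, "apple": 2, "dog": 3}

# Hypothetical 2-number embeddings, hand-picked so related words are close.
embedding = {
    "cat": [0.9, 0.8],
    "dog": [0.8, 0.9],
    "apple": [-0.7, 0.1],
}


def id_distance(a, b):
    """Gap between raw IDs: reflects only the order in which words were numbered."""
    return abs(word_to_id[a] - word_to_id[b])


def embedding_distance(a, b):
    """Euclidean distance between the two words' embedding vectors."""
    return math.dist(embedding[a], embedding[b])


print(id_distance("cat", "apple"), id_distance("cat", "dog"))  # 1 2
print(embedding_distance("cat", "dog") < embedding_distance("cat", "apple"))  # True
```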

And so, for example, we can think of a word embedding as trying to lay out words in a kind of “meaning space” in which words that are somehow “nearby in meaning” appear nearby in the embedding. The actual embeddings that are used—say in ChatGPT—tend to involve large lists of numbers. But if we project down to 2D, we can show examples of how words are laid out by the embedding:

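PS: In the figure, words for animals are grouped in the upper left, close to one another, and words for fruits are grouped in the lower right, also close to one another. Animals and fruits have little to do with each other, so the two groups end up in opposite corners.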
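The article doesn’t say which projection produced the 2D plot. As one common choice, the sketch below uses principal component analysis (PCA), computed by power iteration so it needs only the standard library, to squash hypothetical 4-number word vectors into 2D. The vectors are invented so that animals and fruits differ; the point is only that such groups stay apart after projection.

```python
import math
import random

# Hypothetical 4-number "embeddings": the first two numbers loosely mean
# "animal-ness", the last two "fruit-ness". Real embeddings are learned.
words = {
    "cat": [0.9, 0.8, 0.1, 0.0],
    "dog": [0.8, 0.9, 0.0, 0.1],
    "eagle": [0.7, 0.6, 0.1, 0.1],
    "apple": [0.1, 0.0, 0.9, 0.8],
    "banana": [0.0, 0.1, 0.8, 0.9],
    "cherry": [0.1, 0.1, 0.7, 0.8],
}


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def pca_project(vectors, k=2, iters=200, seed=0):
    """Project vectors onto their top-k principal components (power iteration + deflation)."""
    dim = len(vectors[0])
    mean = [sum(v[j] for v in vectors) / len(vectors) for j in range(dim)]
    centered = [[v[j] - mean[j] for j in range(dim)] for v in vectors]
    rows = [r[:] for r in centered]  # working copy that gets deflated
    rng = random.Random(seed)
    components = []
    for _ in range(k):
        v = [rng.gauss(0, 1) for _ in range(dim)]
        for _ in range(iters):
            scores = [dot(r, v) for r in rows]  # X v
            w = [sum(s * r[j] for s, r in zip(scores, rows)) for j in range(dim)]  # X^T X v
            norm = math.sqrt(dot(w, w))
            if norm == 0:
                raise ValueError("fewer than k directions with nonzero variance")
            v = [x / norm for x in w]
        components.append(v)
        # Deflate: remove the found direction so the next pass finds the next one.
        rows = [[r[j] - dot(r, v) * v[j] for j in range(dim)] for r in rows]
    return [[dot(r, c) for c in components] for r in centered]


names = list(words)
coords = dict(zip(names, pca_project([words[n] for n in names], k=2)))
for name, (x, y) in coords.items():
    print(f"{name:>7}: ({x:+.2f}, {y:+.2f})")
```

Animals and fruits land on opposite sides of the first axis; which side is positive depends on the random starting vector.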

And, yes, what we see does remarkably well in capturing typical everyday impressions. But how can we construct such an embedding? Roughly the idea is to look at large amounts of text (here 5 billion words from the web) and then see “how similar” the “environments” are in which different words appear. So, for example, “alligator” and “crocodile” will often appear almost interchangeably in otherwise similar sentences, and that means they’ll be placed nearby in the embedding. But “turnip” and “eagle” won’t tend to appear in otherwise similar sentences, so they’ll be placed far apart in the embedding.
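As a rough illustration of “similar environments” (a simple count-based method, not the neural-net approach used for the figure), the sketch below describes each word by counts of the words that appear within two positions of it in an invented six-sentence corpus, then compares words with cosine similarity.

```python
import math
from collections import Counter, defaultdict

# Invented toy corpus; the figure was based on about 5 billion words from the web.
sentences = [
    "the alligator swam in the river",
    "the crocodile swam in the river",
    "a hungry alligator ate a fish",
    "a hungry crocodile ate a fish",
    "the eagle flew over the mountain",
    "a farmer pulled a turnip from the field",
]


def context_vectors(sentences, window=2):
    """Count, for every word, which words appear within `window` positions of it."""
    contexts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    contexts[word][tokens[j]] += 1
    return contexts


def cosine(c1, c2):
    """Cosine similarity between two sparse count vectors."""
    num = sum(c1[k] * c2[k] for k in c1.keys() & c2.keys())
    den = math.sqrt(sum(v * v for v in c1.values())) * math.sqrt(sum(v * v for v in c2.values()))
    return num / den if den else 0.0


ctx = context_vectors(sentences)
print(round(cosine(ctx["alligator"], ctx["crocodile"]), 2))  # 1.0
print(round(cosine(ctx["turnip"], ctx["eagle"]), 2))  # 0.29
```

“alligator” and “crocodile” occur in identical surroundings here, so their context vectors match exactly; “turnip” and “eagle” share only “the”.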

But how does one actually implement something like this using neural nets? Let’s start by talking about embeddings not for words, but for images. We want to find some way to characterize images by lists of numbers in such a way that “images we consider similar” are assigned similar lists of numbers.

How do we tell if we should “consider images similar”? Well, if our images are, say, of handwritten digits we might “consider two images similar” if they are of the same digit. Earlier we discussed a neural net that was trained to recognize handwritten digits. And we can think of this neural net as being set up so that in its final output it puts images into 10 different bins, one for each digit.

But what if we “intercept” what’s going on inside the neural net before the final “it’s a ‘4’” decision is made? We might expect that inside the neural net there are numbers that characterize images as being “mostly 4-like but a bit 2-like” or some such. And the idea is to pick up such numbers to use as elements in an embedding.

So here’s the concept. Rather than directly trying to characterize “what image is near what other image”, we instead consider a well-defined task (in this case digit recognition) for which we can get explicit training data—then use the fact that in doing this task the neural net implicitly has to make what amount to “nearness decisions”. So instead of us ever explicitly having to talk about “nearness of images” we’re just talking about the concrete question of what digit an image represents, and then we’re “leaving it to the neural net” to implicitly determine what that implies about “nearness of images”.

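PS: The passage above is a bit convoluted, but the idea isn’t complicated. Any image can be represented by a collection of numbers. In digit recognition, we want the neural net to transform the numbers that represent the original image into numbers that tell us which digit the image shows. As an imperfect analogy, picture a blurry image of a digit gradually becoming sharp. The closer we get to the final step, the sharper the digit and the closer it is to one specific digit, say 4. At earlier steps the image is still somewhat blurry: it looks mostly like a ‘4’ but also a bit like a ‘2’, which suggests that images of 4 and images of 2 are fairly similar. We can take the numbers produced at such a step as the embedding of the digit image.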

So how in more detail does this work for the digit recognition network? We can think of the network as consisting of 11 successive layers, that we might summarize iconically like this (with activation functions shown as separate layers):

At the beginning we’re feeding into the first layer actual images, represented by 2D arrays of pixel values. And at the end—from the last layer—we’re getting out an array of 10 values, which we can think of saying “how certain” the network is that the image corresponds to each of the digits 0 through 9.
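A small sketch of the input side, assuming grayscale pixel values from 0 to 255 (the article doesn’t specify the encoding): the 2D array is flattened row by row into one list of numbers scaled to between 0 and 1. That is the kind of flat vector a fully connected layer takes; a convolutional first layer, like the one in the figure, can take the 2D array directly. The 4×4 image is hypothetical.

```python
def flatten_image(pixels):
    """Flatten a 2D array of 0-255 grayscale values row by row, scaling each to [0, 1]."""
    return [value / 255 for row in pixels for value in row]


# Hypothetical 4x4 image of a "1" (real digit images are larger, e.g. 28x28).
tiny_image = [
    [0, 255, 255, 0],
    [0, 0, 255, 0],
    [0, 0, 255, 0],
    [0, 255, 255, 255],
]

x = flatten_image(tiny_image)
print(len(x), x[:4])  # 16 [0.0, 1.0, 1.0, 0.0]
```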

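After feeding in the image of a 4 (the 4 that follows the word “image” in the figure above), the neurons in the last layer have these values: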

In other words, the neural net is by this point “incredibly certain” that this image is a 4—and to actually get the output “4” we just have to pick out the position of the neuron with the largest value.
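Picking “the position of the neuron with the largest value” is an argmax. In this sketch the output values are hypothetical. With positions counted from 0, the fifth number sits at index 4, so the position directly gives the digit.

```python
def predicted_digit(values):
    """Argmax: the position (0-9) of the output neuron with the largest value."""
    return max(range(len(values)), key=values.__getitem__)


# Hypothetical final-layer output for an image of a 4 (not the article's numbers).
output = [1e-9, 2e-9, 1e-8, 3e-9, 0.99999996, 1e-9, 1e-9, 2e-9, 1e-8, 1e-9]

print(predicted_digit(output))  # 4
```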

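PS: The ten numbers in the figure above are the neural net’s probabilities (each between 0 and 1) that the digit in the image is 0 through 9: the first number is the probability that it is 0, the second that it is 1, and so on. The fifth number is 1, which means the network is essentially 100% certain that the digit is 4.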
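These ten probabilities come out of a softmax, the network’s final operation. A minimal sketch, with hypothetical pre-softmax values rather than the article’s: exponentiating and normalizing makes every output positive with a total of 1, strongly amplifies the largest value, and never changes which position is largest (the exponential is increasing).

```python
import math


def softmax(logits):
    """Exponentiate and normalize: outputs are positive and sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow and does not change the result
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical pre-softmax values for an image of a 4.
logits = [-20.0, -15.0, -5.0, -18.0, 30.0, -12.0, -17.0, -9.0, -11.0, -14.0]
probs = softmax(logits)

print(round(probs[4], 6))  # 1.0
print(probs.index(max(probs)) == logits.index(max(logits)))  # True
```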

But what if we look one step earlier? The very last operation in the network is a so-called softmax which tries to “force certainty”. But before that’s been applied the values of the neurons are:

The neuron representing “4” still has the highest numerical value. But there’s also information in the values of the other neurons. And we can expect that this list of numbers can in a sense be used to characterize the “essence” of the image—and thus to provide something we can use as an embedding. And so, for example, each of the 4’s here has a slightly different “signature” (or “feature embedding”)—all very different from the 8’s:

Here we’re essentially using 10 numbers to characterize our images. But it’s often better to use much more than that. And for example in our digit recognition network we can get an array of 500 numbers by tapping into the preceding layer. And this is probably a reasonable array to use as an “image embedding”.
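A sketch of “tapping into the preceding layer”, assuming a much-simplified, untrained fully connected network rather than the convolutional net in the figure. As in the figure, activation functions are separate layers; the forward pass records every layer’s output, and we read off the 500 numbers produced just before the final linear layer. The 784 inputs assume a 28×28 image, and all weights are random placeholders, so the values themselves mean nothing.

```python
import math
import random

rng = random.Random(0)


def linear(n_in, n_out):
    """Hypothetical dense layer with small random (untrained) weights and no bias term."""
    weights = [[rng.gauss(0, 0.05) for _ in range(n_in)] for _ in range(n_out)]

    def apply(x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

    return apply


def ramp(x):
    """ReLU ("ramp") activation, kept as a separate layer."""
    return [max(0.0, v) for v in x]


def softmax(x):
    m = max(x)
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


layers = [
    ("linear_500", linear(784, 500)),
    ("ramp", ramp),
    ("linear_10", linear(500, 10)),
    ("softmax", softmax),
]


def forward(x, layers):
    """Run x through every layer and keep each intermediate result."""
    trace = []
    for name, layer in layers:
        x = layer(x)
        trace.append((name, x))
    return trace


image = [rng.random() for _ in range(784)]  # stand-in for a flattened 28x28 image
trace = forward(image, layers)
embedding = trace[-3][1]  # 500 numbers: output of "ramp", just before the last linear layer
probs = trace[-1][1]      # 10 numbers: one per digit
print(len(embedding), len(probs))  # 500 10
```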

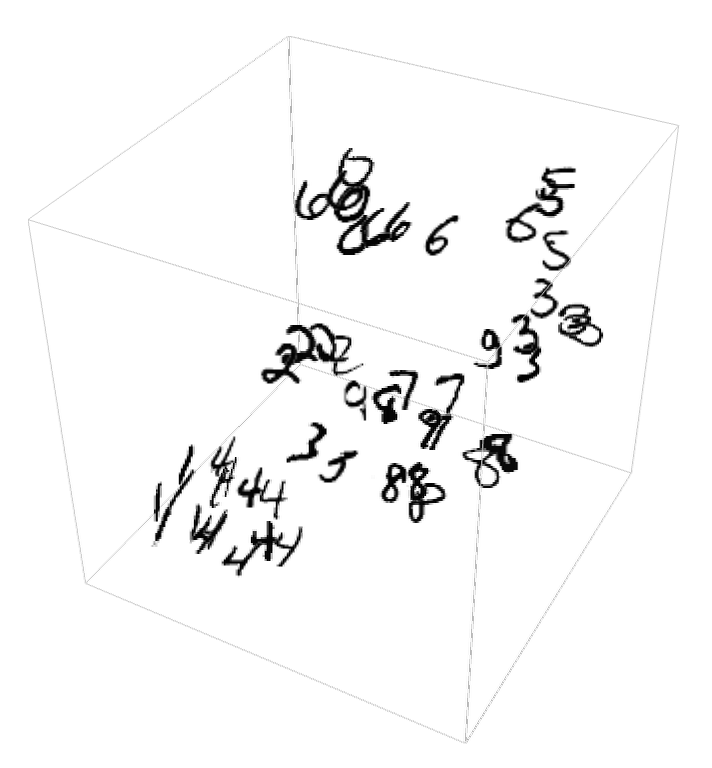

If we want to make an explicit visualization of “image space” for handwritten digits we need to “reduce the dimension”, effectively by projecting the 500-dimensional vector we’ve got into, say, 3D space:

We’ve just talked about creating a characterization (and thus embedding) for images based effectively on identifying the similarity of images by determining whether (according to our training set) they correspond to the same handwritten digit. And we can do the same thing much more generally for images if we have a training set that identifies, say, which of 5000 common types of object (cat, dog, chair, …) each image is of. And in this way we can make an image embedding that’s “anchored” by our identification of common objects, but then “generalizes around that” according to the behavior of the neural net. And the point is that insofar as that behavior aligns with how we humans perceive and interpret images, this will end up being an embedding that “seems right to us”, and is useful in practice in doing “human-judgement-like” tasks.

OK, so how do we follow the same kind of approach to find embeddings for words? The key is to start from a task about words for which we can readily do training. And the standard such task is “word prediction”. Imagine we’re given “the ___ cat”. Based on a large corpus of text (say, the text content of the web), what are the probabilities for different words that might “fill in the blank”? Or, alternatively, given “___ black ___” what are the probabilities for different “flanking words”?
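A count-based sketch of the “the ___ cat” task, assuming an invented five-sentence corpus in place of the web: collect every word that appears between “the” and “cat” and turn the counts into probabilities. Roughly speaking, a neural net trained on this task learns to approximate such probabilities rather than counting them directly.

```python
from collections import Counter

# Invented mini-corpus standing in for "the text content of the web".
corpus = [
    "the black cat sat on the mat",
    "the black cat ran away",
    "the white cat slept",
    "the fat cat ate",
    "the black dog barked",
]


def fill_in_blank(before, after, sentences):
    """Estimate P(word | before ___ after) by counting matching 3-word windows."""
    counts = Counter()
    for s in sentences:
        t = s.split()
        for i in range(1, len(t) - 1):
            if t[i - 1] == before and t[i + 1] == after:
                counts[t[i]] += 1
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


print(fill_in_blank("the", "cat", corpus))
# {'black': 0.5, 'white': 0.25, 'fat': 0.25}
```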

How do we set this problem up for a neural net? Ultimately we have to formulate everything in terms of numbers. And one way to do this is just to assign a unique number to each of the 50,000 or so common words in English. So, for example, “the” might be 914, and “ cat” (with a space before it) might be 3542. (And these are the actual numbers used by GPT-2.) So for the “the ___ cat” problem, our input might be {914, 3542}. What should the output be like? Well, it should be a list of 50,000 or so numbers that effectively give the probabilities for each of the possible “fill-in” words. And once again, to find an embedding, we want to “intercept” the “insides” of the neural net just before it “reaches its conclusion”—and then pick up the list of numbers that occur there, and that we can think of as “characterizing each word”.
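A minimal, untrained sketch of that setup. Only the IDs 914 (“the”) and 3542 (“ cat”) come from the text; the three other IDs, the five-entry vocabulary (standing in for about 50,000 words), the vector sizes and the random weights are hypothetical. Each ID looks up a vector, the two vectors are concatenated and passed through a hidden layer, and a softmax gives one probability per vocabulary entry. The hidden vector is the kind of “inside” one would intercept.

```python
import math
import random

rng = random.Random(0)
EMBED_DIM, HIDDEN_DIM = 8, 6

# 914 = "the" and 3542 = " cat" come from the text; 1, 2, 3 are placeholder IDs.
vocab_ids = [914, 3542, 1, 2, 3]

# Untrained lookup table: one random vector per token ID.
embed = {i: [rng.gauss(0, 1) for _ in range(EMBED_DIM)] for i in vocab_ids}
w_hidden = [[rng.gauss(0, 0.3) for _ in range(2 * EMBED_DIM)] for _ in range(HIDDEN_DIM)]
w_out = [[rng.gauss(0, 0.3) for _ in range(HIDDEN_DIM)] for _ in vocab_ids]


def matvec(matrix, v):
    return [sum(a * b for a, b in zip(row, v)) for row in matrix]


def predict_blank(left_id, right_id):
    """Return (hidden, probs) for "left ___ right": an internal vector and P(each vocab entry)."""
    x = embed[left_id] + embed[right_id]  # concatenate the two context vectors
    hidden = [math.tanh(h) for h in matvec(w_hidden, x)]  # the "inside" one could intercept
    logits = matvec(w_out, hidden)
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return hidden, [e / total for e in exps]


hidden, probs = predict_blank(914, 3542)  # "the ___ cat" -> input {914, 3542}
print(len(hidden), len(probs))  # 6 5
```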

OK, so what do those characterizations look like? Over the past 10 years there’ve been a sequence of different systems developed (word2vec, GloVe, BERT, GPT, …), each based on a different neural net approach. But ultimately all of them take words and characterize them by lists of hundreds to thousands of numbers.

In their raw form, these “embedding vectors” are quite uninformative. For example, here’s what GPT-2 produces as the raw embedding vectors for three specific words:

If we do things like measure distances between these vectors, then we can find things like “nearnesses” of words. Later we’ll discuss in more detail what we might consider the “cognitive” significance of such embeddings. But for now the main point is that we have a way to usefully turn words into “neural-net-friendly” collections of numbers.
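One common way to measure “nearness” is cosine similarity, the cosine of the angle between two vectors. The sketch below ranks the neighbors of a word using invented three-number vectors (real ones have hundreds of numbers); Euclidean distance would be another reasonable choice.

```python
import math

# Hypothetical 3-number word vectors; real embeddings are learned and much longer.
vectors = {
    "alligator": [0.9, 0.1, 0.3],
    "crocodile": [0.85, 0.15, 0.35],
    "eagle": [0.6, 0.7, 0.1],
    "turnip": [0.05, 0.2, 0.95],
}


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nearest(word):
    """Other words ranked from most to least similar to `word`."""
    others = [w for w in vectors if w != word]
    return sorted(others, key=lambda w: cosine_similarity(vectors[word], vectors[w]), reverse=True)


print(nearest("alligator"))  # ['crocodile', 'eagle', 'turnip']
```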

But actually we can go further than just characterizing words by collections of numbers; we can also do this for sequences of words, or indeed whole blocks of text. And inside ChatGPT that’s how it’s dealing with things. It takes the text it’s got so far, and generates an embedding vector to represent it. Then its goal is to find the probabilities for different words that might occur next. And it represents its answer for this as a list of numbers that essentially give the probabilities for each of the 50,000 or so possible words.
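ChatGPT actually builds its text embedding with a transformer network. The sketch below swaps in a much simpler, hypothetical stand-in (averaging the token vectors) just to show the shape of the computation: text of any length becomes one fixed-length vector, and scoring that vector against every vocabulary entry, followed by a softmax, yields one probability per possible next token. The vectors are invented and untrained, so the probabilities themselves mean nothing.

```python
import math

# Hypothetical 3-number token vectors for a 5-token vocabulary (the real one has ~50,000).
token_vectors = {
    "the": [0.1, 0.0, 0.2],
    " black": [0.7, 0.1, 0.0],
    " cat": [0.9, 0.3, 0.1],
    " sat": [0.2, 0.9, 0.1],
    " mat": [0.3, 0.2, 0.8],
}


def embed_text(tokens):
    """Fixed-length vector for any number of tokens: the mean of their vectors."""
    dim = len(next(iter(token_vectors.values())))
    return [sum(token_vectors[t][j] for t in tokens) / len(tokens) for j in range(dim)]


def next_token_probs(tokens):
    """Score each vocabulary entry against the text vector, then apply a softmax."""
    text_vec = embed_text(tokens)
    scores = {t: sum(a * b for a, b in zip(text_vec, v)) for t, v in token_vectors.items()}
    m = max(scores.values())
    exps = {t: math.exp(s - m) for t, s in scores.items()}
    total = sum(exps.values())
    return {t: e / total for t, e in exps.items()}


probs = next_token_probs(["the", " black", " cat"])
for token, p in probs.items():
    print(repr(token), round(p, 3))
```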

(Strictly, ChatGPT does not deal with words, but rather with “tokens”—convenient linguistic units that might be whole words, or might just be pieces like “pre” or “ing” or “ized”. Working with tokens makes it easier for ChatGPT to handle rare, compound and non-English words, and, sometimes, for better or worse, to invent new words.)
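To illustrate splitting words into pieces, here is a greedy longest-match tokenizer over a tiny hypothetical vocabulary. This is not what GPT-2 or ChatGPT use (their tokenizers are built with byte-pair encoding), but it shows how “pretokenized” can become pieces like “pre”, “token” and “ized”, and how an unknown word is still covered by falling back to single characters.

```python
# Hypothetical subword vocabulary; GPT-2's real vocabulary has ~50,000 entries.
vocab = {"pre", "token", "ized", "walk", "ing"}


def tokenize(word, vocab):
    """Greedy longest-match split into subword pieces; unmatched characters become single-char tokens."""
    pieces, i = [], 0
    while i < len(word):
        for j in range(len(word), i, -1):  # try the longest candidate first
            if word[i:j] in vocab:
                pieces.append(word[i:j])
                i = j
                break
        else:
            pieces.append(word[i])  # nothing in the vocabulary matches: fall back to one character
            i += 1
    return pieces


print(tokenize("pretokenized", vocab))  # ['pre', 'token', 'ized']
print(tokenize("walking", vocab))       # ['walk', 'ing']
print(tokenize("zap", vocab))           # ['z', 'a', 'p']
```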

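PS: The articles up to this point have mainly covered the neural networks and word embeddings that ChatGPT uses. These techniques are also used in many machine learning tasks beyond ChatGPT.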

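I came across this article online; it analyzes how ChatGPT works. The author explains ChatGPT step by step, from the basics to deeper material, without going into the implementation of specific model algorithms, so it suits developers who are not machine learning specialists.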

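The author, Stephen Wolfram, is a well-known scientist, entrepreneur and computer scientist. He is the founder and CEO of Wolfram Research, which has developed many software products and technologies, including Mathematica and the Wolfram Alpha computational knowledge engine.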

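I first translated the article with ChatGPT and then revised the translation myself; the explanations I added (shown in red in the original post) are mostly the paragraphs marked “PS”. Because the original is long, I have split it by section into several articles. The original article is here: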

https://writings.stephenwolfram.com/2023/02/what-is-chatgpt-doing-and-why-does-it-work/
